45 machine learning noisy labels

Toronto Machine Learning: His work on Multitask Learning helped create interest in a subfield of machine learning called Transfer Learning. Rich received an NSF CAREER Award in 2004 (for Meta Clustering), best paper awards in 2005 (with Alex Niculescu-Mizil), 2007 (with Daria Sorokina), and 2014 (with Todd Kulesza, Saleema Amershi, Danyel Fisher, and Denis Charles), and co-chaired KDD in …

Meta Label Correction for Noisy Label Learning (PDF): … the noisy label depends only on the true label and is independent of the data itself (Hendrycks et al. 2018). In this paper, we adopt label correction to address the problem of learning with noisy labels from a meta-learning perspective. We term our method meta label correction (MLC). Specifically, we view the label correction ...
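The assumption quoted above, that the noisy label depends only on the true label and not on the input, is often written as a transition matrix T with T[i][j] = P(noisy label = j | true label = i). The sketch below corrupts clean labels under that assumption; the function name `corrupt_labels` and the matrix values are illustrative and are not taken from the MLC paper.

```python
import random

def corrupt_labels(labels, transition, seed=0):
    """Resample each label from the row of `transition` indexed by its true class.

    transition[i][j] is the assumed probability P(noisy = j | true = i).
    Input features are never consulted, which is exactly the
    class-conditional (feature-independent) noise assumption.
    """
    for row in transition:
        if abs(sum(row) - 1.0) > 1e-9:
            raise ValueError("each row of the transition matrix must sum to 1")
    rng = random.Random(seed)
    classes = range(len(transition))
    return [rng.choices(classes, weights=transition[y])[0] for y in labels]


# Illustrative 2-class matrix: class 0 is flipped to 1 about 30% of the time,
# class 1 is never flipped.
T = [[0.7, 0.3],
     [0.0, 1.0]]
noisy = corrupt_labels([0] * 10_000 + [1] * 10_000, T)
print(sum(noisy[:10_000]) / 10_000)  # observed flip rate for class 0, close to 0.3
```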

Using Noisy Labels to Train Deep Learning Models on Satellite Imagery: The goal of the project was to detect buildings in satellite imagery using a semantic segmentation model. We trained the model using labels extracted from OpenStreetMap (OSM), an open source, crowd-sourced map of the world. The labels generated from OSM contain noise: some buildings are missing, and others are poorly aligned with ...
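The two kinds of OSM noise described above, missing buildings and misaligned footprints, can be simulated on clean labels to study how a segmentation model reacts to them. This is a hypothetical noise model that assumes buildings are axis-aligned boxes; `osm_style_noise`, `p_missing`, and `max_shift` are illustrative names, not part of the project.

```python
import random

def rasterize(boxes, height, width):
    """Render end-exclusive boxes (r0, c0, r1, c1) into a 0/1 building mask, clipped to the grid."""
    mask = [[0] * width for _ in range(height)]
    for r0, c0, r1, c1 in boxes:
        for r in range(max(r0, 0), min(r1, height)):
            for c in range(max(c0, 0), min(c1, width)):
                mask[r][c] = 1
    return mask

def osm_style_noise(boxes, p_missing, max_shift, seed=0):
    """Hypothetical noise: drop each building with probability p_missing,
    then shift the survivors by up to max_shift pixels in each direction."""
    rng = random.Random(seed)
    noisy = []
    for r0, c0, r1, c1 in boxes:
        if rng.random() < p_missing:
            continue  # building missing from the map
        dr = rng.randint(-max_shift, max_shift)
        dc = rng.randint(-max_shift, max_shift)
        noisy.append((r0 + dr, c0 + dc, r1 + dr, c1 + dc))  # same size, misaligned
    return noisy


clean = [(2, 2, 6, 6), (10, 3, 14, 9), (4, 12, 9, 18)]
noisy_mask = rasterize(osm_style_noise(clean, p_missing=0.2, max_shift=2, seed=1), 20, 20)
```

Training once on `rasterize(clean, ...)` and once on the noisy masks gives a controlled way to measure how much each noise type hurts.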


Towards harnessing feature embedding for robust learning with noisy labels: Given noisily labeled training data, robust learning methods are required so that the resulting DNNs can predict the true labels. In the literature, existing works can generally be divided into two categories: (1) statistical learning-based methods and (2) model prediction-based methods.

Noisy Labels: Theoretical Approaches/Empirical Studies: We demonstrate that several learning-with-noisy-labels solutions proposed in the literature relate closely to negative label smoothing (NLS), which is defined as using a negative weight to combine the hard and soft labels. We unify (positive) LS and NLS into generalized label smoothing (GLS) and explain the properties of GLS when learning with noisy labels.

Machine learning with label and data noise - GitHub: Image classification experiments on machine learning problems, based on PyTorch. The README covers installation, usage, license, contributing, and questions; installation starts by cloning the repository.
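The GLS family mentioned above mixes a hard (one-hot) label with a soft uniform label. One common way to write it, assumed here, is target = (1 - r) * one_hot + r / K for K classes: r > 0 gives ordinary label smoothing, and r < 0 gives negative label smoothing, which puts a negative weight on the soft label. The sketch builds that target and scores a prediction against it with cross-entropy.

```python
import math

def smoothed_target(label, num_classes, rate):
    """(1 - rate) * one_hot(label) + rate / num_classes.

    rate > 0: positive label smoothing; rate < 0: negative label smoothing,
    which pushes the true class above 1 and the other entries below 0.
    """
    return [(1.0 - rate) * (1.0 if k == label else 0.0) + rate / num_classes
            for k in range(num_classes)]

def soft_cross_entropy(probs, target):
    """Cross-entropy -sum_k target_k * log(probs_k); entries of target may be negative."""
    return -sum(t * math.log(p) for t, p in zip(target, probs))


ls = smoothed_target(0, num_classes=4, rate=0.2)    # roughly [0.85, 0.05, 0.05, 0.05]
nls = smoothed_target(0, num_classes=4, rate=-0.2)  # roughly [1.15, -0.05, -0.05, -0.05]
probs = [0.7, 0.1, 0.1, 0.1]
print(soft_cross_entropy(probs, ls), soft_cross_entropy(probs, nls))
```

Both targets still sum to 1; only the sign of the weight on the uniform part changes.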

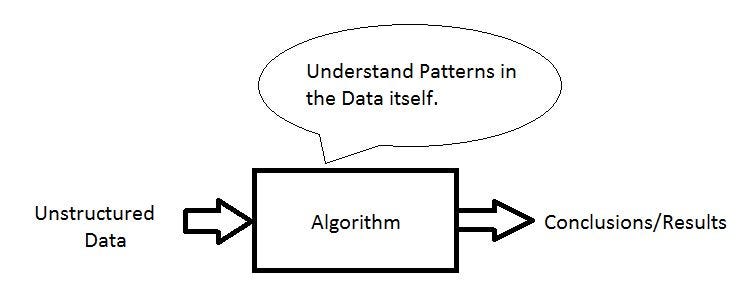

How to Become a Machine Learning Engineer: 15 Steps - wikiHow (05.03.2021): Machine Learning from Stanford, an introductory class focused on breaking down complex concepts related to the field. Learning from Data from Caltech, an introductory class focused on mathematical theory and algorithmic application. Practical Machine Learning from Johns Hopkins University, a class focused on data prediction.

How to Improve Deep Learning Model Robustness by Adding Noise: Keras supports adding noise to models via the GaussianNoise layer, which adds noise to inputs of a given shape. The noise has a mean of zero, and its standard deviation must be specified as a parameter. For example:

```python
# import the noise layer
from keras.layers import GaussianNoise
# define the layer; the standard deviation of 0.1 is an illustrative value
layer = GaussianNoise(0.1)
```

Machine learning systems design - Chip Huyen: When considering machine learning models, don't forget that non-deep learning models exist. Deep learning models are often expensive to train and hard to explain. Most of the time, in production, they are only useful if their performance is unquestionably superior. For example, for the task of classification, before using a transformer-based model with 300 million parameters, …

Machine learning - Wikipedia: Machine learning (ML) is a field of ... Some of the training examples are missing training labels, yet many machine-learning researchers have found that unlabeled data, when used in conjunction with a small amount of labeled data, can produce a considerable improvement in learning accuracy. In weakly supervised learning, the training labels are noisy, limited, or …
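To make the behaviour of Keras' GaussianNoise layer concrete without depending on Keras, the plain-Python sketch below adds zero-mean noise with a chosen standard deviation during training and passes inputs through unchanged at inference, since the Keras layer is only active at training time. `gaussian_noise` is a hypothetical stand-in, not the Keras implementation.

```python
import random
import statistics

def gaussian_noise(inputs, stddev, training, rng=None):
    """Add N(0, stddev^2) noise to every input when training; identity at inference."""
    if not training:
        return list(inputs)
    rng = rng or random.Random(0)
    return [x + rng.gauss(0.0, stddev) for x in inputs]


clean = [0.0] * 20_000
noisy = gaussian_noise(clean, stddev=0.1, training=True)
print(statistics.mean(noisy), statistics.stdev(noisy))  # close to 0.0 and 0.1
```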

[P] Noisy Labels and Label Smoothing : MachineLearning - reddit: It's safe to say it has significant label noise. Another thing to consider is dense prediction of things such as semantic classes or boundaries for pixels in videos or images. By their very nature, classes may be subjective, and different people may label with different acuity; add to this the class imbalance problem.

A Gentle Introduction to Bayes Theorem for Machine Learning (04.12.2019): Bayes Theorem provides a principled way for calculating a conditional probability. It is a deceptively simple calculation, although it can be used to easily calculate the conditional probability of events where intuition often fails. Although it is a powerful tool in the field of probability, Bayes Theorem is also widely used in the field of machine learning.

Cost-Sensitive Learning with Noisy Labels (PDF). Keywords: class-conditional label noise, statistical consistency, cost-sensitive learning. Introduction: Learning from noisy training data is a problem of theoretical as well as practical interest in machine learning. In many applications, such as learning to classify images, the labels are often noisy.

Deep learning with noisy labels: Exploring techniques and remedies in medical image analysis. Abstract: Supervised training of deep learning models requires large labeled datasets. There is a growing interest in obtaining such datasets for medical image analysis applications. However, the impact of label noise has not received sufficient attention.
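Bayes' theorem applies directly to noisy labels: given a prior over true classes and an assumed class-conditional noise model P(noisy | true), it yields the probability of each true class once a noisy label is observed. The numbers below are invented for illustration; they show the kind of case the Bayes article describes, where intuition often fails.

```python
def clean_label_posterior(prior, transition, observed):
    """P(true = i | noisy = observed) for every class i, via Bayes' theorem.

    prior[i]         = P(true = i)
    transition[i][j] = P(noisy = j | true = i)   (assumed noise model)
    """
    joint = [prior[i] * transition[i][observed] for i in range(len(prior))]
    evidence = sum(joint)  # P(noisy = observed)
    return [p / evidence for p in joint]


# Class 1 is rare (10% prior); 20% of class-0 samples are mislabeled as 1.
prior = [0.9, 0.1]
transition = [[0.8, 0.2],
              [0.1, 0.9]]
print(clean_label_posterior(prior, transition, observed=1))  # roughly [0.667, 0.333]
```

Even though the annotator said "1", class 0 stays about twice as likely: the many class-0 samples produce more false 1s (0.9 × 0.2 = 0.18) than the few class-1 samples produce correct ones (0.1 × 0.9 = 0.09).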

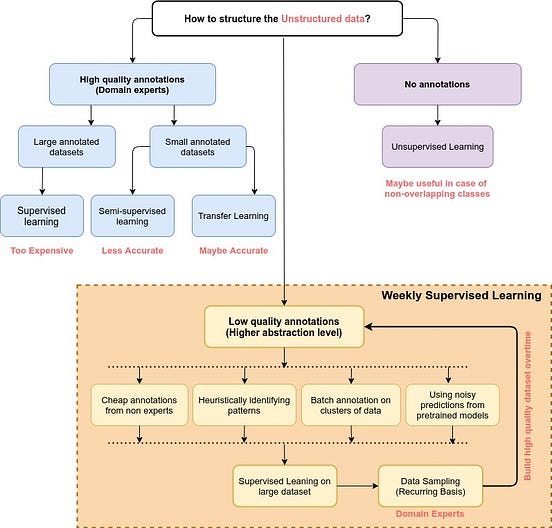

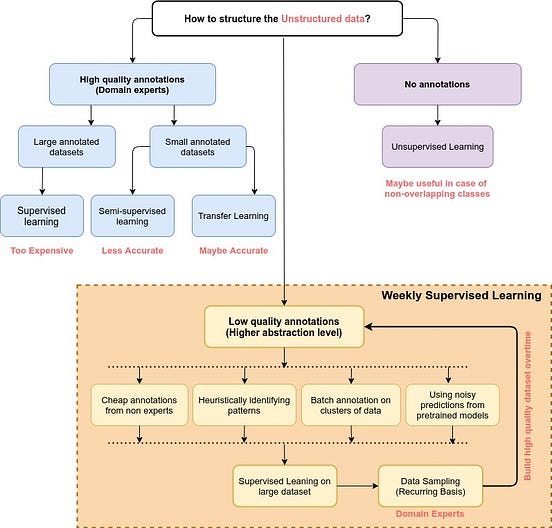

Active label cleaning for improved dataset quality under ... - Nature: Imperfections in data annotation, known as label noise, are detrimental to the training of machine learning models and have a confounding effect on the assessment of model performance. ...

How noisy is your dataset? Sample and weight training samples to ...: Second, the label "noisy" stands for a dataset crawled (for example, by icrawler using keywords) ... When training a machine learning model, due to the limited capacity of computer memory, the set ...

Preparing Medical Imaging Data for Machine Learning - PMC (Feb 18, 2020): Fully annotated data sets are needed for supervised learning, whereas semisupervised learning uses a combination of annotated and unannotated images to train an algorithm (67,68). Semisupervised learning may allow for a limited number of annotated cases; however, large data sets of unannotated images are still needed.

Machine Learning and Data Mining Lecture Notes, 1.1 Types of Machine Learning: Some of the main types of machine learning are: 1. Supervised Learning, in which the training data is labeled with the correct answers, e.g., “spam” or “ham.” The two most common types of supervised learning are classification (where the outputs are discrete labels, as in spam filtering) and regression ...
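One simple way to answer "how noisy is your dataset?" is to audit a uniformly random sample by hand and report the observed error rate with a confidence interval. The sketch below uses the Wilson score interval; this is a generic statistics technique rather than the method of the linked articles, and `noise_rate_interval` is a hypothetical helper.

```python
import math

def noise_rate_interval(num_wrong, num_checked, z=1.96):
    """Wilson score interval for the label-error rate of a random audit sample.

    z = 1.96 gives an approximate 95% interval.
    """
    if num_checked <= 0:
        raise ValueError("num_checked must be positive")
    p = num_wrong / num_checked
    denom = 1 + z * z / num_checked
    center = (p + z * z / (2 * num_checked)) / denom
    half = z * math.sqrt(p * (1 - p) / num_checked + z * z / (4 * num_checked ** 2)) / denom
    return max(0.0, center - half), min(1.0, center + half)


# Example: 100 randomly sampled items are re-checked and 10 labels turn out wrong.
low, high = noise_rate_interval(10, 100)
print(f"observed noise rate 10%, 95% CI [{low:.3f}, {high:.3f}]")
```

The Wilson interval is preferred here over the plain normal approximation because it stays inside [0, 1] and behaves sensibly when few or no errors are found.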

Weakly Supervised Learning: Classification with limited annotation capacity | by Ved Vasu Sharma ...

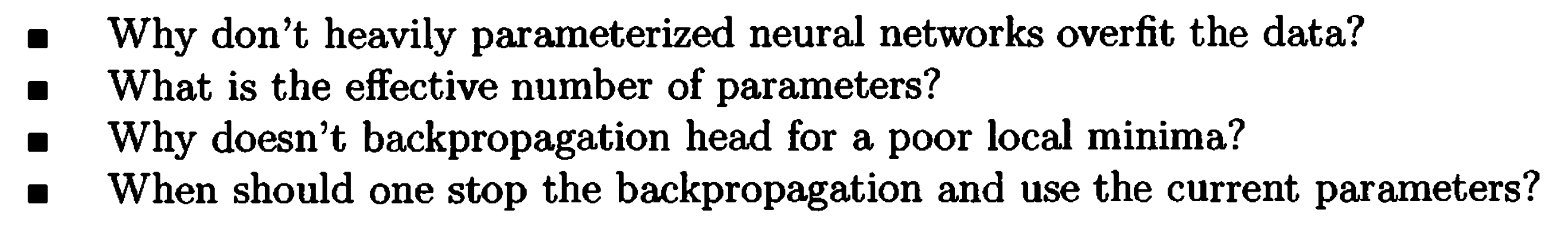

Learning from Noisy Labels with Deep Neural Networks: A Survey. As noisy labels severely degrade the generalization performance of deep neural networks, learning from noisy labels (robust training) is becoming an important task in modern deep learning applications. In this survey, we first describe the problem of learning with label noise from a supervised learning perspective.

GitHub - weijiaheng/Advances-in-Label-Noise-Learning: A ... (Jun 15, 2022). Listed papers include: Learning from Noisy Labels via Dynamic Loss Thresholding; Evaluating Multi-label Classifiers with Noisy Labels; Self-Supervised Noisy Label Learning for Source-Free Unsupervised Domain Adaptation; Transform consistency for learning with noisy labels; Learning to Combat Noisy Labels via Classification Margins.

An Introduction to Classification Using Mislabeled Data: The basic steps are to train a set of classifiers on a subset of the training data, use them to predict the labels of the remaining data, and then take the percentage of classifiers that fail to predict a sample's given label as an estimate of the probability that the sample is mislabeled.
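The procedure above can be sketched with cross-validation: each sample is predicted only by classifiers that never saw it during training, and its score is the fraction of those classifiers that disagree with its given label. The three simple learners (nearest centroid, 1-NN, 3-NN), the modulo fold split, and the toy data are illustrative assumptions; any set of classifiers could be plugged in.

```python
import math
from collections import Counter

def nearest_centroid(train_x, train_y):
    """Fit: store one mean point per class; predict the class of the closest mean."""
    groups = {}
    for x, y in zip(train_x, train_y):
        groups.setdefault(y, []).append(x)
    centroids = {y: [sum(coord) / len(pts) for coord in zip(*pts)] for y, pts in groups.items()}
    return lambda x: min(centroids, key=lambda y: math.dist(x, centroids[y]))

def knn(k):
    """Return a fit function for a k-nearest-neighbour majority-vote classifier."""
    def fit(train_x, train_y):
        def predict(x):
            nearest = sorted(zip(train_x, train_y), key=lambda pair: math.dist(x, pair[0]))[:k]
            return Counter(y for _, y in nearest).most_common(1)[0][0]
        return predict
    return fit

def mislabel_scores(xs, ys, learners, num_folds=5):
    """Fraction of learners whose out-of-fold prediction disagrees with each given label."""
    n = len(xs)
    scores = [0.0] * n
    for fold in range(num_folds):
        held_out = [i for i in range(n) if i % num_folds == fold]
        train = [i for i in range(n) if i % num_folds != fold]
        models = [fit([xs[i] for i in train], [ys[i] for i in train]) for fit in learners]
        for i in held_out:
            wrong = sum(model(xs[i]) != ys[i] for model in models)
            scores[i] = wrong / len(models)
    return scores


# Two well-separated clusters; the point at index 4 sits in cluster 0 but is labeled 1.
xs = [(0, 0), (0, 1), (1, 0), (1, 1), (0.5, 0.5),
      (10, 10), (10, 11), (11, 10), (11, 11), (10.5, 10.5)]
ys = [0, 0, 0, 0, 1, 1, 1, 1, 1, 1]
scores = mislabel_scores(xs, ys, [nearest_centroid, knn(1), knn(3)])
print(max(range(len(xs)), key=scores.__getitem__))  # index of the most suspicious sample
```

Using several different learners matters: a single classifier's mistakes are easily confused with label errors, whereas unanimous disagreement across diverse models is stronger evidence.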

Noisy Labels in Remote Sensing: Annotating remote sensing (RS) images with multi-labels at large scale to drive deep learning (DL) studies is time-consuming, complex, and costly in operational scenarios. To address this issue, existing thematic products (e.g., the Corine Land-Cover map) can be used; however, the land-use and land-cover labels from these products can be incomplete and noisy. Handling data with incomplete and noisy labels may result in ...

subeeshvasu/Awesome-Learning-with-Label-Noise - GitHub. Listed papers include: 2021-IJCAI: Towards Understanding Deep Learning from Noisy Labels with Small-Loss Criterion; 2022-WSDM: Towards Robust Graph Neural Networks for Noisy Graphs with Sparse Labels; 2022-Arxiv: Multi-class Label Noise Learning via Loss Decomposition and Centroid Estimation.
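The small-loss criterion in the first title rests on the usual observation that networks tend to fit clean samples before memorizing noisy ones, so samples with the smallest loss under their given label are treated as probably clean. A minimal sketch of that selection step, assuming predicted class probabilities are already available; `select_small_loss` and `keep_ratio` are illustrative names, and a real training loop would apply this per mini-batch.

```python
import math

def select_small_loss(pred_probs, given_labels, keep_ratio):
    """Return sorted indices of the `keep_ratio` fraction of samples with the
    smallest cross-entropy loss under their given labels."""
    losses = [-math.log(max(probs[y], 1e-12)) for probs, y in zip(pred_probs, given_labels)]
    num_keep = round(keep_ratio * len(losses))
    by_loss = sorted(range(len(losses)), key=losses.__getitem__)
    return sorted(by_loss[:num_keep])


pred_probs = [[0.9, 0.1], [0.2, 0.8], [0.6, 0.4], [0.05, 0.95]]
given_labels = [0, 1, 1, 0]  # the last label disagrees strongly with the model
print(select_small_loss(pred_probs, given_labels, keep_ratio=0.5))
```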

Different types of Machine learning and their types. | by Madhu Sanjeevi ( Mady ) | Deep Math ...

linkedin-skill-assessments-quizzes/machine-learning-quiz.md … (04.06.2022): Machine Learning Q1. You are part of a data science team working for a national fast-food chain. You create a simple report that shows a trend: Customers who visit the store more often and buy smaller meals spend more than customers who visit less frequently and buy larger meals.


GitHub - cleanlab/cleanlab: The standard data-centric AI ...

```python
# Generate noisy labels using the noise_matrix. Guarantees exact amount of noise in labels.
# Assumes y_hidden_actual_labels (the true labels) and noise_matrix are already defined.
from cleanlab.benchmarking.noise_generation import generate_noisy_labels

s_noisy_labels = generate_noisy_labels(y_hidden_actual_labels, noise_matrix)
# This package is full of other useful methods for learning with noisy labels.
```
